Best of Android: How we test displays

There’s no shortage of strong opinions on display tech online, but display performance is something we can test objectively! There’s a lot of hyperbole and… somewhat manufactured vitriol out there, so we’re interested in sifting through what science has to say.

How we test

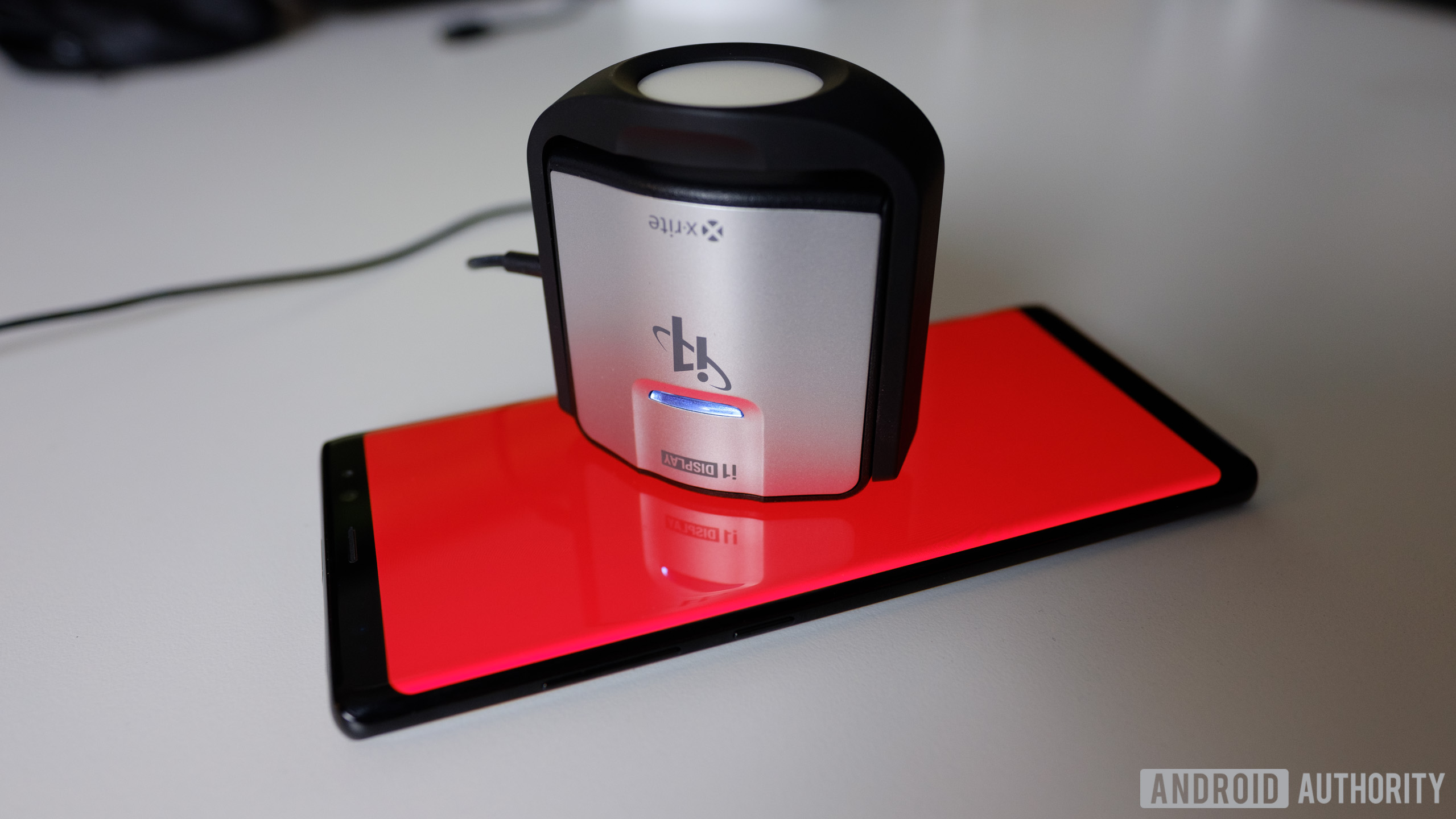

To test displays accurately, we want to determine the best possible results from each smartphone. Consequently, we test a fair bit more than just a few saturation sweeps on the default screen mode. By using a photospectrometer and industry-standard software from Portrait Displays, we can quickly gather a wealth of performance data by taking over a hundred individual readings.

This software is able to coordinate an app on the phone with a photospectrometer to put each display — be it OLED, LCD, or otherwise — through its paces. Because displays are often calibrated to meet different color spaces, it’s our policy to adjust our testing to fit the phone’s intended standards, so errors are weighed against the correct color gamuts, gamma, and the like. This way, displays calibrated for DCI-P3 aren’t measured against an sRGB gamut and so on.
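To make the idea concrete, here is a minimal Python sketch (not the Calman workflow itself) of why the target matters. The primary coordinates are the published CIE 1931 xy values for sRGB and DCI-P3; the `measured` reading and the `primary_offsets` helper are made up for illustration, and plain xy distance is only a crude stand-in for a proper color error metric.

```python
import math

# CIE 1931 xy chromaticities of the red, green, and blue primaries
# as published for each standard.
TARGET_PRIMARIES = {
    "sRGB":   {"R": (0.640, 0.330), "G": (0.300, 0.600), "B": (0.150, 0.060)},
    "DCI-P3": {"R": (0.680, 0.320), "G": (0.265, 0.690), "B": (0.150, 0.060)},
}

def primary_offsets(measured, target_name):
    """Distance in xy space between each measured primary and its target."""
    target = TARGET_PRIMARIES[target_name]
    return {ch: math.dist(measured[ch], target[ch]) for ch in target}

# Hypothetical reading from a panel tuned for DCI-P3 (illustrative, not real data).
measured = {"R": (0.678, 0.321), "G": (0.267, 0.688), "B": (0.150, 0.061)}

for name in TARGET_PRIMARIES:
    offsets = primary_offsets(measured, name)
    print(name, {ch: round(d, 4) for ch, d in offsets.items()})
```

Scored against DCI-P3, this made-up panel sits very close to its targets. Scored against sRGB, the same readings show large red and green offsets, even though nothing about the panel changed.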

Using CalMAN, we can correctly measure color accuracy, gamma, brightness, and more. In our deep-dive reviews, we point out any trouble spots we find. But not to worry: we put all the results in context for you with easy-to-digest charts and comparisons.
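As a rough illustration of what a gamma measurement boils down to, the sketch below fits a single power-law exponent to greyscale luminance readings. It assumes a simple model, luminance = white × (level / 255)^gamma, with a negligible black level; `estimate_gamma` and the sample readings are hypothetical and are not taken from CalMAN.

```python
import math

def estimate_gamma(readings, white_nits):
    """Least-squares gamma from (grey_level 0-255, luminance in nits) pairs.

    Fits log(L / white) = gamma * log(level / 255), a line through the origin.
    """
    xs, ys = [], []
    for level, nits in readings:
        # Skip black and white: their logs are undefined or carry no slope info.
        if 0 < level < 255 and nits > 0:
            xs.append(math.log(level / 255))
            ys.append(math.log(nits / white_nits))
    if not xs:
        raise ValueError("need at least one mid-grey reading")
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

# Synthetic readings generated from a 2.2 power curve at 400 nits peak white.
white = 400.0
readings = [(level, white * (level / 255) ** 2.2) for level in (32, 64, 128, 192)]
print(round(estimate_gamma(readings, white), 3))
```

A real display rarely follows one exponent across the whole greyscale, which is why a per-level gamma plot tends to be more informative than a single number.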

What we measure

Because different phones target different color spaces, the math gets a little tricky when comparing results, so we take special care to account for this. Our system makes this easy, and SpectraCal’s software makes the job almost automatic: all we have to do is run the tests and export the data.

We measure:

- Color temperature

- Color gamut

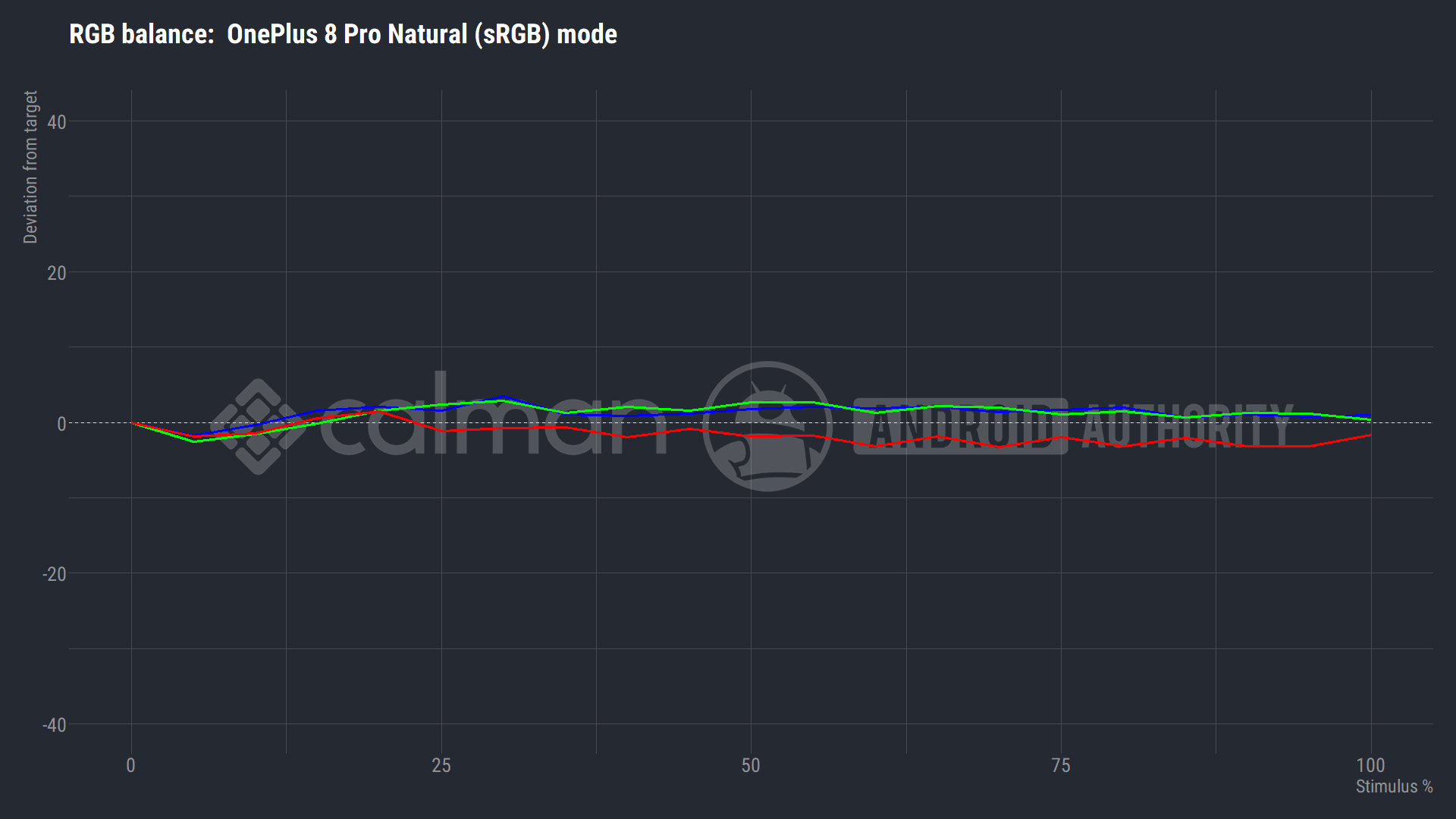

- RGB balance

- White point shift (CCT)

- Pixel density

- Peak brightness

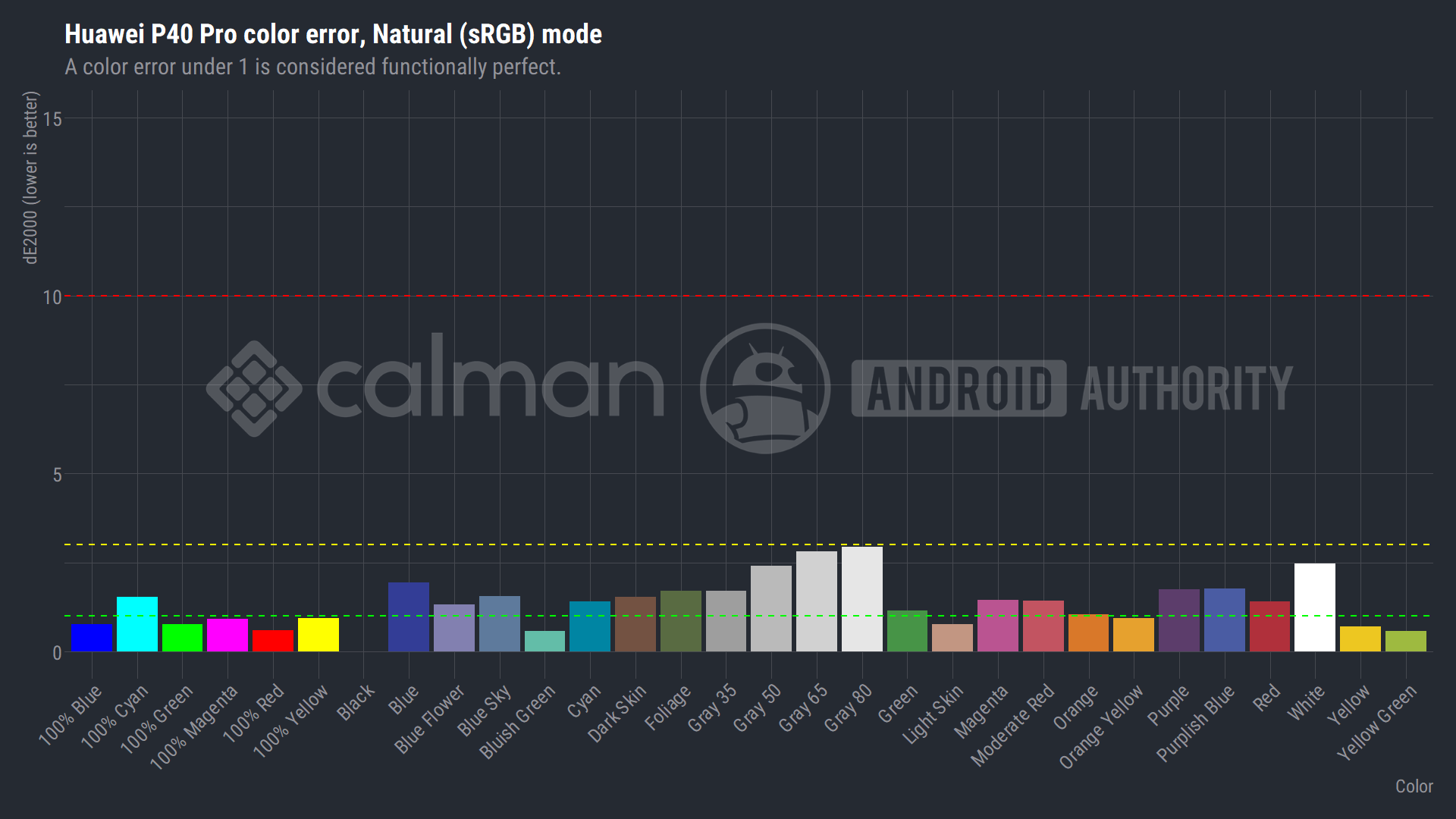

- Color error (DeltaE2000, DeltaEITP)

- Greyscale error

- Alternate display modes
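For readers curious about the math behind a few of these items, here is a hedged Python sketch of three of them: pixel density, correlated color temperature (CCT), and DeltaE2000 color error. The function names are ours, the CCT uses McCamy's approximation (reasonable only near the white points displays normally target), and the DeltaE2000 routine follows the standard CIEDE2000 formula with all weighting factors set to 1. The testing software may compute these differently.

```python
import math

def pixel_density(width_px, height_px, diagonal_in):
    """Pixels per inch, measured along the screen diagonal."""
    return math.hypot(width_px, height_px) / diagonal_in

def cct_mccamy(x, y):
    """Approximate CCT in kelvin from CIE 1931 xy (McCamy's formula)."""
    n = (x - 0.3320) / (0.1858 - y)
    return 449 * n**3 + 3525 * n**2 + 6823.3 * n + 5520.33

def delta_e_2000(lab1, lab2):
    """CIEDE2000 difference between two CIELAB colors (kL = kC = kH = 1)."""
    L1, a1, b1 = lab1
    L2, a2, b2 = lab2
    # Rescale the a* axis to improve behavior near neutral colors.
    C_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2
    G = 0.5 * (1 - math.sqrt(C_bar**7 / (C_bar**7 + 25**7)))
    a1p, a2p = (1 + G) * a1, (1 + G) * a2
    C1p, C2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = math.degrees(math.atan2(b1, a1p)) % 360
    h2p = math.degrees(math.atan2(b2, a2p)) % 360

    # Lightness, chroma, and hue differences.
    dL, dC, dh = L2 - L1, C2p - C1p, h2p - h1p
    if C1p * C2p == 0:
        dh = 0.0
    elif dh > 180:
        dh -= 360
    elif dh < -180:
        dh += 360
    dH = 2 * math.sqrt(C1p * C2p) * math.sin(math.radians(dh / 2))

    # Means used by the weighting functions (hue mean handles wrap-around).
    L_mean, C_mean, h_sum = (L1 + L2) / 2, (C1p + C2p) / 2, h1p + h2p
    if C1p * C2p == 0:
        h_mean = h_sum
    elif abs(h1p - h2p) <= 180:
        h_mean = h_sum / 2
    elif h_sum < 360:
        h_mean = (h_sum + 360) / 2
    else:
        h_mean = (h_sum - 360) / 2

    T = (1 - 0.17 * math.cos(math.radians(h_mean - 30))
           + 0.24 * math.cos(math.radians(2 * h_mean))
           + 0.32 * math.cos(math.radians(3 * h_mean + 6))
           - 0.20 * math.cos(math.radians(4 * h_mean - 63)))
    S_L = 1 + 0.015 * (L_mean - 50) ** 2 / math.sqrt(20 + (L_mean - 50) ** 2)
    S_C = 1 + 0.045 * C_mean
    S_H = 1 + 0.015 * C_mean * T
    R_C = 2 * math.sqrt(C_mean**7 / (C_mean**7 + 25**7))
    d_theta = 30 * math.exp(-(((h_mean - 275) / 25) ** 2))
    R_T = -math.sin(math.radians(2 * d_theta)) * R_C  # blue-region rotation term

    tL, tC, tH = dL / S_L, dC / S_C, dH / S_H
    return math.sqrt(tL**2 + tC**2 + tH**2 + R_T * tC * tH)

print(round(pixel_density(1080, 1920, 5.0)))      # a 5-inch 1080p panel
print(round(cct_mccamy(0.3127, 0.3290)))          # D65 white point
# Widely used CIEDE2000 reference pair; the published result is 2.0425.
print(round(delta_e_2000((50.0, 2.6772, -79.7751), (50.0, 0.0, -82.7485)), 4))
```

Greyscale error can be treated the same way: it is the color error evaluated on neutral grey patches rather than saturated colors.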

We also make sure to run every test in both the default display mode and the best-performing display mode for each phone. This way, we’re fair to what the manufacturer is trying to achieve with its devices while staying accurate about how they perform. We also include guidelines in the charts we publish to show which errors you can easily see (anything above red), which you might notice as a small error (anything above yellow), and which would take a trained eye to spot (anything above green).
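The banding logic behind those chart guidelines can be sketched in a few lines of Python. The three threshold values below are placeholders we picked for illustration, since the actual lines are defined on the published charts; `visibility_band` is likewise a hypothetical helper.

```python
# Placeholder thresholds in Delta E units (assumptions, not the charts' real values).
GREEN_LINE, YELLOW_LINE, RED_LINE = 1.0, 3.0, 5.0

def visibility_band(delta_e):
    """Map a color error to the band it falls into on a chart."""
    if delta_e > RED_LINE:
        return "easy to see"
    if delta_e > YELLOW_LINE:
        return "small but noticeable"
    if delta_e > GREEN_LINE:
        return "visible to a trained eye"
    return "below the green line"

for error in (0.6, 2.1, 4.0, 7.5):
    print(error, "->", visibility_band(error))
```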

We find that even if two phones have the same hardware, the software tuning by the manufacturers will often be different enough that you can see it. By testing each display and not relying on a specs list, we can make sure you know what you’re getting into when you look for a phone with a good screen.